Microsoft’s new clustering technology is taking the industry by storm, with NT professionals widely implementing the product in their networking environments. Clustering is imperative for NT to succeed in the large-scale, business-critical market; however, it remains poorly documented and difficult to configure. Although MSCS is an extremely immature product, it is expected to play a key role in analysts’ predictions that NT will surpass UNIX as the universal NOS of choice.

Mmm, so much has changed since then. Or has it?

Update:

It’s been brought to my attention that I might have been a little “harsh” on Microsoft’s validation tool. To be fair to Microsoft, apparently “warnings” in the validation report do NOT mean the Failover Cluster wouldn’t be supported. They’re just that: warnings that there might or might not be a problem. I guess it’s up to the administrator to interpret these warnings and decide if they are showstoppers or not.

The other thing that’s become apparent is that the validation tool itself hasn’t recently been updated to reflect Windows Hyper-V 2012’s native support for NIC teaming. So my team was fine after all…

Disclaimer:

The post was actually written before I started using the SCVMM “Logical Switch”; as such, it makes references to Standard Switches and the NIC Teaming available at the Windows Hyper-V level. Such were the problems with getting Windows Failover Clustering to work that I had to keep troubleshooting it on the backburner whilst I worked on learning something new. I couldn’t allow my problems with Failover Clustering to hold me back. Additionally, this article started its life as a Windows Hyper-V 2012 R2 PREVIEW post, but it took so long (days, weeks, months…) to resolve the errors that it ended up being finished on the RTM release that shipped recently to TechNet/MSDN subscribers – and that RTM release did indeed fix the storage problem that seemed to be at the heart of one of my problems…

Edited Highlights:

- Although SCVMM has the capacity to create a cluster, I consider this high risk, as it assumes everything is working perfectly and all your pre-reqs are met. I found using the Failover Cluster Manager console was a safer bet, as you could check that everything was in order before adding the cluster to SCVMM.

- The right hand doesn’t know what the left hand is doing – I’ve had situations where the Failover Cluster Manager validation claims I have no network redundancy (when it’s clear I do). It’s like the Failover Clustering software isn’t aware of Windows Hyper-V’s native NIC teaming capabilities.

- Networking is made more complex by the fact you have to navigate Windows Server 2012’s own networking stack, which was originally designed for a general-purpose operating system. So you have situations where IP addresses are being assigned (via DHCP) to network cards which will be dedicated to the VMs. It makes things complicated, as validation wizards use these IP addresses to confirm the correct network communication paths.

- HomeLabbers Beware – if you have a limited number of NICs you will struggle to configure the same setup as you might with vSphere AND still get passes on validation wizards from Microsoft. I have 4 gigabit cards in my servers and I struggled. If you’re setting up Windows Hyper-V for tyre-kicking purposes you may well need to sacrifice network redundancy to your VMs, storage or management network. I would recommend ignoring those errors, as you’re unlikely to be getting support from Microsoft anyway…

Introduction

I first came across Microsoft Clustering back in the mid-90s. Back then, I was a newly qualified MCSE and MCT – but there was a lack of demand and resources (hardware requirements), and so I didn’t get my hands dirty at all. If you wanted to do clustering then you would be looking at a JBOD with a shared SCSI bus (I once owned a DEC JBOD that could do precisely this – I can count myself one of the small number of folks who has configured VMotion using a DEC JBOD with Dell 1950s running on Pentium III processors!!!). A bit later on, OEMs like HP started to offer cluster packages based on two DL-3Somethings and an MSA1000.

Needless to say this sort of hardware was beyond the finances of most training companies I worked for, to run a Microsoft Clustering course once a year…

It wasn’t until 2003/4 when I became a freelance VMware Certified Instructor (VCI) that I could start to use the technology. That’s because virtualization made clusters so much easier to get up and running. That’s true even now – any relatively skilled person using vSphere, Fusion or Workstation could create a “Cluster-in-a-box” scenario without needing to meet exorbitant hardware requirements. It might interest you to know that in those early ESX 2.x courses we actually walked students through this configuration. Back then ESX 2.x/vCenter1 had no “high availability” technology; that didn’t get introduced until VMware Virtual Infrastructure 3.x (Vi3). At the time instructors like myself would demo either Windows 2000 or Windows 2003 cluster pairs. I remember that first experience of setting up a Microsoft Cluster. Generally, the first node would go through without a problem; it was always the second node you worried about. I would sit at my desk whispering under my breath “go on, go on you [expletive deleted], join the cluster”, hoping that through the sheer will of profane words the technology would work. You see, even in the 2000/2003 days the requisites (both in software and configuration) for Microsoft Clustering were quite convoluted…

At the time I didn’t think Microsoft would seriously become committed to virtualization. In 2003/4 when I told former colleagues of mine (who had gone on to work for Microsoft in the 90s) that I was getting into this newfangled thing called VMware virtualization, most of them rather scoffed. They regarded it as an interim technology that would be used to run legacy systems (like our old friend NT4) on new hardware. They didn’t think that virtualization would be the de facto approach to running server workloads. But heck, I’m no visionary myself (I just got lucky backing the right horse in the race), and these folks probably won’t be alone in their “Road-to-Damascus” conversion to virtualization. Saul has definitely become Paul in recent years. So, with this background, it was with quite a lot of trepidation that I came to look at what’s now called “Failover Clustering” with Windows Hyper-V 2012.

It’s worth saying that a cluster can be created directly in SCVMM, and although this does have the option to validate the settings on the Windows Hyper-V servers at the same time, it’s somewhat of a high-risk strategy in my book. Everything about the configuration of the servers must be correct for it to work successfully…

Installing & Verifying the Cluster

As with all things Windows, Failover Clustering is not built-in – it’s yet another one of those darn roles you have to install on each Windows Server 2012 Hyper-V R2 Cluster node.
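For anyone who would rather not click through Server Manager on every node, the role can also be added from PowerShell. This is only a minimal sketch, assuming the two node names used in this post and an elevated session with admin rights on both hosts:

```powershell
# Node names are the ones used in this post; adjust for your own environment.
$nodes = 'hyperv01nyc', 'hyperv02nyc'

foreach ($node in $nodes) {
    # Failover-Clustering is the feature name. -IncludeManagementTools also
    # installs the matching management tools where they are available.
    Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools -ComputerName $node
}
```

It is still one install per node, but at least it can be driven from a single console.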

Once again Microsoft is showing its “Operating System” centric view of the world. In VMware you create a cluster in vCenter (not at the hypervisor level), and then merely drag-and-drop vSphere hosts to the cluster; this triggers an install of the HA Agent (or to be more accurate the “Fault Domain Manager” – FDM) without you ever having to log into a host. Pretty quickly in vSphere a collection of hosts in vCenter just becomes a block of CPU and Memory to which you can deploy VMs. With Microsoft you have a dedicated console for managing the Failover Cluster. I must admit the number of consoles, control panel applets and MMCs I’ve had to use to get even this far is racking up.

The Failover Cluster MMC comes with a “Validate Configuration” option that I thought was probably wise to use before trying to do any real work. Knowing that the number of prerequisites required to stand up a Microsoft cluster in the past was pretty high, I wanted to make sure I covered my back before I did anything serious.

The wizard allows you to add as many Windows Hyper-V hosts as you have (I’ve got just two sadly), and if you select the option “Run only the tests I select”, you can see just how many settings the wizard validates. I’m sure the customers who use Microsoft Hyper-V must really appreciate this utility. Imagine trying to validate all those settings manually; let’s hope most of them are defaults!

This utility could be massively improved by allowing the admin to re-run it over and over again as they work to resolve the errors. As it is, each time you have to manually add each host, and run it again and again. You can deselect components – so that does allow you to be systematic.

I focused on resolving, say, “Hyper-V Configuration” errors first, then moved on to the next category. Running a full test on just two hosts took about 2 minutes (the book I’m reading states it can be as much as 10 minutes, and I figure they are basing this on a large environment). I’m not sure how long this validation process would take if I had a fully populated 64-node Windows Hyper-V cluster. I’d be interested in hearing stories from the community. I would expect a perfectly configured set of hosts to take less time, as often there are timeouts and retries with these sorts of tests. If you are doing repeated tests in an effort to check your changes you might prefer to use the PowerShell command:

Test-Cluster -Node hyperv01nyc, hyperv02nyc
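The same cmdlet can be narrowed to one category at a time, which mirrors the “Run only the tests I select” option in the wizard. A small sketch, assuming the category name is spelled as it appears in the validation report:

```powershell
# Re-run only the category currently being fixed.
Test-Cluster -Node hyperv01nyc, hyperv02nyc -Include 'Hyper-V Configuration'

# List the applicable tests and categories without running any of them.
Test-Cluster -Node hyperv01nyc, hyperv02nyc -List
```

Because the node list lives on the command line, recalling the previous command is enough to repeat a run after each change.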

Sadly, my hosts failed some of the tests, and issued warnings on others. So my job for the day was to work through these validation warnings and resolve them myself.

Now, I want to be 100% fair to Microsoft on this – many of the failures were caused by:

- Human Error – Like any admin, I make mistakes – and indeed some of those errors could equally occur if I was using VMware vSphere.

- Human Ignorance – I freely admit I’m new to Windows Hyper-V and as such I’m inexperienced.

With that said, it’s interesting that the vast majority of my major problems were around network and storage – two parts of Microsoft Clustering that have always been a challenge since Windows NT4 days.

I’m going to document all these issues because that’s what I do. I document all my failures and errors with VMware technologies. My theory has always been if I make a mistake, then there’s a good chance that others will too. We are all human, and human error accounts for 99% of the problems in life in my view. That’s why we have computers to do so much stuff, because they aren’t prone to human error – just computer errors generated by the humans who programmed them. It’s my hope that users of software search on Google for solutions to their problems and stumble across my content. It means I feel good because I’ve helped someone, and that hasn’t done my career any harm in the last decade.

Hyper-V Settings: Consistently Named vSwitches

My first error was a classic one because I’ve seen students (and I admit occasionally myself) name portgroups inconsistently even in vSphere Standard vSwitches. If you’re fortunate to be using VMware Distributed vSwitches you would never see this, but with Standard vSwitches – then you have to be consistent. Sure enough I had mislabelled the vSwitches. Fortunately, I have only two Windows Hyper-V hosts so it didn’t take long to use Hyper-V Manager to fix this problem.
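A quick way to spot this kind of mismatch without clicking through Hyper-V Manager on each host is to group the switch names across nodes. This is a rough sketch only, assuming the Hyper-V PowerShell module and the two node names from this post; the switch names in the commented-out fix are placeholders, not the ones I actually used:

```powershell
$nodes = 'hyperv01nyc', 'hyperv02nyc'

# Collect every virtual switch from both hosts, then group by name.
# A name found on fewer hosts than $nodes.Count is a likely mismatch.
Get-VMSwitch -ComputerName $nodes |
    Group-Object -Property Name |
    Where-Object { $_.Count -lt $nodes.Count } |
    Select-Object Name, @{ Name = 'FoundOn'; Expression = { $_.Group.ComputerName -join ', ' } }

# Hypothetical fix: both names below are placeholders.
# Rename-VMSwitch -ComputerName hyperv02nyc -Name 'vSwitch0-Ext' -NewName 'vSwitch0-External'
```

An empty result means every switch name exists on every host, at least as far as a name comparison can tell.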

A re-run of the Validate Configuration led to every SysAdmin’s wet-dream of green ticks everywhere!

So I repeated this pattern for all the rest of my red X’s where they appeared in the report.

Networking: NIC Teaming

The next warning/error I had concerned network communication – essentially this part of the test validates the networking layer. As you might recall I played around with Microsoft’s first ever attempt to do native NIC teaming without the need for the safety net of OEM drivers from the likes of Intel/Broadcom. I set up what I thought was a classical design – two teamed NICs for management, and two teamed NICs for my VMs.

From what I can fathom, it’s like the Failover Clustering Validation Tool isn’t aware of this configuration, and thinks I could have no network redundancy. Of course, this is pretty important to stop such things as isolation and split-brain. That’s when the cluster thinks a node is dead to the world, when it’s actually still alive – merely because it isn’t reachable across the management network. I guess if you’re a Microsoft customer you’re used to this sort of thing – one of the problems with these sorts of oil-tanker style Mega-Software-Vendors, after all, is getting everyone to be on the same page. This is a problem that VMware would do well to learn from and avoid at all costs – staying lean and mean is the way to remain competent and speedboat-like when it comes to innovation and quality.

Here’s the warning:

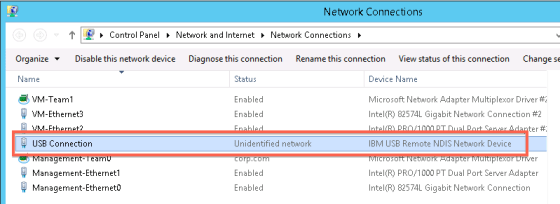

The red stuff concerns the system trying to test an IBM USB “network connection” that only appears when Windows Hyper-V is installed to my Lenovo TS200s. The Validate Configuration wizard appears to mistakenly think this is a genuine connection when it isn’t. I thought perhaps the best way to resolve this issue was to merely disable this unwanted device, so that the validation wizard would ignore it. As it turns out I had disabled this interface on one Windows Hyper-V node but not the other. Disabling the device on all my hosts successfully removed the bogus warning.
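To avoid the “disabled on one node but not the other” mistake, the adapter can be disabled on every host in one pass. A hedged sketch, assuming PowerShell remoting is enabled on both nodes; the `'*USB*'` match pattern is my assumption about how the device describes itself, so check the output of `Get-NetAdapter` on your own hardware first:

```powershell
$nodes = 'hyperv01nyc', 'hyperv02nyc'

Invoke-Command -ComputerName $nodes -ScriptBlock {
    # The '*USB*' pattern is an assumption; make sure only the unwanted
    # device matches before removing -WhatIf from the last line.
    Get-NetAdapter |
        Where-Object { $_.InterfaceDescription -like '*USB*' } |
        Disable-NetAdapter -Confirm:$false -WhatIf
}
```

With `-WhatIf` in place nothing is changed; it only reports which adapters would be disabled on each node.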

The other warning message reads:

Node hyperv02nyc.corp.com is reachable from Node hyperv01nyc.corp.com by only one pair of network interfaces. It is possible that this network path is a single point of failure for communication within the cluster. Please verify that this single path is highly available, or consider adding additional networks to the cluster.

It’s like the teaming layer so perfectly gives the appearance of a single NIC (when in fact it’s backed by two independent NICs on different physical switches) that the cluster validation layer thinks it could be a single point of failure. It’s a classic case of the “left-hand,” the “Failover Cluster Team,” not knowing what the “right-hand,” the “NIC Team,” is up to.

Sadly, there’s no way to really tell the validation tool that everything is in fact OK. The best option is to disable that part of the test altogether.
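From PowerShell, leaving out that one test looks something like the following. This is a sketch that assumes the test name matches the “Validate Network Communication” entry shown in the report:

```powershell
# Skip only the network communication test; every other test still runs.
Test-Cluster -Node hyperv01nyc, hyperv02nyc -Ignore 'Validate Network Communication'
```

The skipped test simply doesn’t appear as a pass in the report, so this hides the warning rather than proving the redundancy is there.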

In a similar way VMware vSphere will carry out validation checks when you create a HA/DRS cluster – and if there is no redundancy to the management layer you will see warnings and alerts. There’s no special “validation” tool as such; VMware just checks the cluster against its requirements and flags those that have not been met.

What’s nice about VMware’s HA/DRS clustering is that NIC teaming has always been a feature – even before VMware vSphere had a clustering feature, and so it understands that two NICs backing a management port means you have redundancy (assuming they are plugged into different physical switches of course!).

I’m not a fan of bypassing tests just to get a green tick. So I decided to do some experimentation. I decided to reconfigure my iSCSI networking. So rather than using a single NIC Team – I would have two teams. One for management called Management-Team0 and one called Storage-Team1. This would mean that the iSCSI network would have two distinct teams and physical cards to take the network traffic. I thought with both servers seeing both the management network and the storage network this would be counted as redundancy. Of course that was cheating a little bit – after all you can’t configure 3 NIC Teams on a server with 4 network cards! Think about that for a second… 😉

I re-ran the Validate Configuration wizard once more hoping that my network configuration would pass this time. But I was right out of luck. It seems like the Validate Configuration wizard detected that “Storage-Team1” was being used for iSCSI communication, and doesn’t allow it to be used for management redundancy.

I seemed to be blocked at every turn. The validator refused to accept my Microsoft NIC Team as offering redundancy, and I could not reuse the storage network. It seems like the ONLY way to pass this requirement is to have two dedicated NICs: one for management and one for heartbeat. This “heartbeat” network just used 10.10.10.0/24 as its range and doesn’t speak to anything on the network except the other node. This meant my hyperv01nyc and hyperv02nyc hosts were pingable via 192.168.3.x, 172.168.3.x, and 10.10.10.x. This did also mean I had to sacrifice my redundancy to the storage as I had run out of physical NICs to do any more teaming – but hey, at least I hoped the validation wizard would be happy! Sadly, that was not to be… It appears that it imposes a packet loss test as well, so if a NIC interface has more than 10% packet loss it fails the test. I did wonder whether ALL the network changes I’d been making might actually have been the source of the packet loss in the first place.

Sure enough, with the ping -t command I could see packet loss was occurring. Interestingly, this packet loss was between the two Hyper-V Servers (192.168.3.x and 10.10.10.x) but not to the storage layer (172.168.3.x).
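Rather than eyeballing a scrolling ping, the loss can be turned into a percentage and compared with the 10% figure the validator appears to use. A minimal sketch, assuming Windows PowerShell as shipped with Server 2012 R2 (newer PowerShell versions report failed pings differently); `Get-PacketLossPercent` is a helper name I made up for illustration:

```powershell
function Get-PacketLossPercent {
    param(
        [string]$Target,
        [int]$Count = 50
    )
    # In Windows PowerShell, Test-Connection emits one object per reply and
    # an error per lost ping, so counting objects gives the replies received.
    $replies = @(Test-Connection -ComputerName $Target -Count $Count -ErrorAction SilentlyContinue)
    [math]::Round(100 * ($Count - $replies.Count) / $Count, 1)
}

# hyperv02nyc is the peer node from this post; repeat for each address
# (management, heartbeat, storage) you want to compare.
Get-PacketLossPercent -Target hyperv02nyc -Count 50
```

Running it against each network in turn makes it easier to see which path is dropping packets.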

It did occur to me that Windows might not like a NIC Team where there was actually only one physical NIC backing it. I decided to abandon the whole idea of having a NIC Team except for my virtual machine networks, and instead go back to bog standard NICs. It would take a little time to do this because I would be remotely using Microsoft RDP to do this as my servers lack ILO/DRAC/BMC boards.

So I removed both the Management-Team0 and the Heartbeat-Team1. That was a bit hairy because it resulted in a Microsoft RDP disconnect, but when the teams were removed I did eventually get a connection back. The primary IP address for my Management-Team0 was restored to the Ethernet0 interface – and the reconnect feature of Microsoft RDP reconnected me back to the server. I was in luck – no need to take a drive to the colocation. Sadly, I did also lose my IP configuration to the iSCSI Storage that was backed by the team – but it didn’t take long to reconfigure it again. Fortunately, there wasn’t anything running on the iSCSI volumes I had there, so I could just reconnect them using the iSCSI Initiator applet.

Once I had undone all the work I had previously carried out with NIC Teaming – the ping tests passed, and I was getting no lost packets. It kind of makes sense that a NIC Team with only one physical NIC present is going to cause problems. So I was able to get a successful pass from the “Validate Configuration” wizard on the category of “Validate Network Communication”.

But only at the expense of having no network redundancy to my management and storage networks – it appears I simply don’t have enough NICs to both meet the Microsoft requirements and my own. I would need to either buy another dual-port NIC and hope I didn’t run out of Ethernet ports on the physical switch – or remove redundancy from the virtual machines, to offer greater redundancy to the Windows Hyper-V Server. My life would have been much easier if Microsoft Failover Clustering software just recognised its own NIC Teaming layer. I guess I could just have lived with the fact that Microsoft’s own validator doesn’t validate my network configuration properly. But I’d be worried about the support. By doing this I created another problem, which was an MPIO error for the iSCSI connections.

So on a 4-NIC box I felt more torn than I would be with VMware ESX. As I tried to meet the validator’s requirements in one area (networking) I caused errors elsewhere (storage). In the end I disabled MPIO altogether (after all I had lost my NIC Team) to make it work.

Networking: TCP/IP Error

The other warning I had concerned the IP configuration on the two nodes. It seems as if my “VM-Team1” IP stack was either picking up IP addresses from my DHCP server that’s on that network, or alternatively wasn’t picking up an IP address at all.

The exact error message read like this:

Node hyperv02nyc.corp.com has an IPv4 address 169.254.58.231 configured as Automatic Private IP Address (APIPA) for adapter vEthernet (vSwitch0-External). This adapter will not be added to the Windows Failover Cluster. If the adapter is to be used by Windows Failover Cluster, the IPv4 properties of the adapter should be changed to allow assignment of a valid IP address that is not in the APIPA range. APIPA uses the range of 169.254.0.1 thru 169.254.255.254 with subnet mask of 255.255.0.0.

This error message seems to be somewhat bogus to me. After all, neither the physical NICs of my “VM-Team1” nor the NIC Team itself should need an IP address. It’s the VMs that sit on the virtual switch backed by that NIC Team that need one. In VMware vSphere the only parts of the hypervisor that need an IP address are those used for internal infrastructure communications – Management, VMotion and Fault-Tolerance. Beyond that, the only IP stack present would be the one within the VM’s guest operating system.

I thought the easiest way to get rid of this warning would be to disable the TCP stack on the NIC team. However, that had already happened by virtue of the teaming process. It turned out the problem was caused by a misconfiguration by myself. When I created the virtual switch called “vSwitch1-external”, I’d forgotten to disable the option to “Allow management operating system to share this network adapter”.

In VMware vSphere no such concept exists, as we feel that there should never be a case where the underlying hypervisor should really need to speak to the VMs using standard networking. If you did want a VM to speak to VMware vSphere and back again – you would either need a virtual machine portgroup on the management switch, or you’d need to enable a route between the two discrete networks. Personally, I think that Microsoft exposing this option to SysAdmins is dangerous, especially as they have seen fit to make this the default. If you want this sort of configuration it should be possible, but in such a way that you really understand the consequences of your actions. As ever an endless diet of clicking “next-next-next and finish” can lead to suboptimal configurations – that’s true for ALL vendors. That’s why a SysAdmin should and could engage their brains; you cannot rely on any vendor to get their defaults right 100% of the time. And if I’m brutally honest that includes VMware as well. There I said it. Through gritted teeth.

If you enable this “sharing” then what Windows will do is create a kind of bogus logical interface called in my case “vEthernet (vSwitch0-External)”. It’s this interface that is attempting to get an IP address and failing.

Removing the “sharing” option on the virtual switch removes this bogus interface, and the Validate Configuration wizard now passes without an error or warning (assuming I ask it to ignore the NIC Team “feature”).

Woohoo! By now it was beyond lunchtime so I took a bit of a break. I figured if I managed to get a 2-node cluster up and running by the end of the day, that could be considered some sort of success. I later discovered I was being wildly optimistic.
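The same check and fix can be sketched in PowerShell. This assumes it is run locally on the affected node and that the switch is named as in the error message above; don’t do this on a switch that carries your management traffic, or you will cut yourself off:

```powershell
# Show any host adapters that fell back to an APIPA (169.254.x.x) address.
Get-NetIPAddress -AddressFamily IPv4 |
    Where-Object { $_.IPAddress -like '169.254.*' } |
    Select-Object InterfaceAlias, IPAddress

# Show which virtual switches are shared with the management OS.
Get-VMSwitch | Select-Object Name, SwitchType, AllowManagementOS

# Stop sharing the switch named in the error message with the management OS.
Set-VMSwitch -Name 'vSwitch0-External' -AllowManagementOS $false
```

After the last command the “vEthernet (...)” interface should no longer be listed by the first one.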

Storage: Quorum Witness

By now I realised it was probably time to re-run the validate configuration against my storage. After all there’d been some networking issues which I resolved, and perhaps some of the warnings were bogus ones or had been resolved by my other actions. I guess my confusion and anxiety comes from having it so easy with VMware. Since ESX 2.x (circa 2003/4) VMware have always had a cluster file system called VMFS. No special software or service is needed to make this file system available to multiple vSphere hosts. You just present your LUN/Volume with either iSCSI or FC, and format. Job done. A little bit later in the lifetime of VMware, around the ESX 3.x era, VMware started to support NFS as a protocol. So now we just refer to these as “datastores” which you pick for the purpose you need (capacity, performance, IOPS, replication and so on).

I had a number of warnings about storage, and to be honest I wasn’t really surprised. I do find Microsoft’s approach to storage a little bit confusing coming off the back of using VMware for so long, but also from those early experiences of setting up clustering in Windows 2000/3 days. Back then you had to be careful not to bring disks online until you were 100% sure that all the nodes were part of the cluster, otherwise you could get all manner of corruption issues. I assume that is a thing of the past – but I wasn’t prepared to take any risks when it comes to data. So I have two Windows Hyper-V nodes with access to an iSCSI volume on a Dell EqualLogic – but I had not brought it online or formatted it yet – as I figured the Failover Cluster might handle the process of enrolling the disk, making a “Cluster Shared Volume” (CSV) and then formatting it to make it available to multiple hosts. It turns out that this is not the case. The disk needs to be brought online on one of the Windows Hyper-V nodes, initialized and formatted before creating a cluster for the first time. This is then repeated on each server, with care being taken to make sure the drive letters are consistently assigned.

One thing that became clear is that the “Validate Configuration” tool does not check whether you have presented a “Quorum Witness”. If you do not supply some sort of storage (either a share or a volume) for this process, then when you go to create the cluster you will get errors and warnings about this.

A Quorum Witness is used in the “voting” process when a Windows Hyper-V Server fails. You can either have a small volume presented to all the hosts to act as the quorum or else you can use a shared location that is accessible to all the hosts. A quick Google (have you noticed how the word “Google” is usurping the word “search” – it’s insidious!) brought up this rather lengthy explanation of the role of the Quorum Witness:
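The online/initialize/format steps on the first node can be sketched with the storage cmdlets. Treat this as an illustration only: the disk number, drive letter and volume label are assumptions of mine, and these commands are destructive if pointed at the wrong disk:

```powershell
# DESTRUCTIVE: disk number 1, drive letter Q and the label are assumptions.
# Confirm the right disk with Get-Disk before running anything below.
Get-Disk | Select-Object Number, FriendlyName, OperationalStatus, PartitionStyle

$diskNumber = 1
Set-Disk -Number $diskNumber -IsOffline $false      # bring the disk online
Set-Disk -Number $diskNumber -IsReadOnly $false     # clear the read-only flag
Initialize-Disk -Number $diskNumber -PartitionStyle GPT
New-Partition -DiskNumber $diskNumber -UseMaximumSize -DriveLetter Q |
    Format-Volume -FileSystem NTFS -NewFileSystemLabel 'ClusterDisk' -Confirm:$false
```

Fixing the drive letter explicitly, rather than letting Windows pick one, is what keeps the letters consistent from node to node.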

http://technet.microsoft.com/en-us/library/jj612870.aspx

I’d have to say much of this content is quite heavy going, and it’s written in that kind of computer speak where a sentence makes so many references to concepts that are taken for granted, it qualifies as a register in its own right. Try this opening statement on for size.

The quorum for a cluster is determined by the number of voting elements that must be part of active cluster membership for that cluster to start properly or continue running. By default, every node in the cluster has a single quorum vote. In addition, a quorum witness (when configured) has an additional single quorum vote. You can configure one quorum witness for each cluster. A quorum witness can be a designated disk resource or a file share resource. Each element can cast one “vote” to determine whether the cluster can run. Whether a cluster has quorum to function properly is determined by the majority of the voting elements in the active cluster membership.

Don’t worry, if you had to read that twice or three times before you got it – you’re not alone. However, by my reckoning the requirement was a simple one – I needed additional volumes/LUNs presented to the Windows Hyper-V servers. These needn’t be huge in size, because they merely hold the data required for the cluster to confirm it has a quorum. It’s a funny old term “quorum”, but I do remember it from my university days. It was used to stop voting procedures due to a lack of members of the student union present. Without a quorum new policies and procedures could not be passed.

I had to fire up my manager for the Dell EqualLogic array to create two volumes/LUNs and present them to my hosts.

Remember merely rescanning disks in Computer Management is not enough with Windows Hyper-V. If you’re running iSCSI you must do a rescan with the iSCSI initiator first. This is a two-step process – first clicking the “Refresh” button, followed by using the “Connect” button to establish a session to each iSCSI target.

I’d have to rinse and repeat this for every Microsoft Hyper-V server in my environment. At this point I was beginning to feel quite grateful that I only had two Windows Hyper-V servers to manage!!! You see, there have been some external studies that demonstrate that Windows Hyper-V is more labour intensive to manage than VMware vSphere. I was pretty sceptical about that, but the more I immerse myself in the Microsoft world the more I think it’s true. If this had been vSphere – a right-click of the datacenter or cluster object in vSphere would allow me with a single click to rescan an entire block of hosts.
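The majority rule in that quoted passage boils down to a few lines of arithmetic. Here is a small sketch of it; `Test-ClusterHasQuorum` is a made-up helper for illustration, not a real cmdlet, and it deliberately ignores any dynamic vote adjustment the cluster service may do:

```powershell
function Test-ClusterHasQuorum {
    param(
        [int]$TotalVotes,   # every node plus the witness, if one is configured
        [int]$ActiveVotes   # voters still in active cluster membership
    )
    # A majority is more than half of all voting elements.
    $needed = [math]::Floor($TotalVotes / 2) + 1
    return $ActiveVotes -ge $needed
}

# Two nodes, no witness: losing one node leaves 1 of 2 votes.
Test-ClusterHasQuorum -TotalVotes 2 -ActiveVotes 1   # False

# Two nodes plus a witness: losing one node leaves 2 of 3 votes.
Test-ClusterHasQuorum -TotalVotes 3 -ActiveVotes 2   # True
```

That second case is why a two-node cluster like mine wants a witness: the extra vote is what lets the surviving node carry on.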
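In fairness, the refresh-and-connect routine can be pushed to every node from one console with PowerShell. A rough sketch, assuming PowerShell remoting is enabled and the target portal has already been added on each node:

```powershell
$nodes = 'hyperv01nyc', 'hyperv02nyc'

Invoke-Command -ComputerName $nodes -ScriptBlock {
    # Equivalent of "Refresh": re-query each known portal for its targets.
    Get-IscsiTargetPortal | Update-IscsiTargetPortal

    # Equivalent of "Connect": log in to any target without a session yet.
    Get-IscsiTarget |
        Where-Object { -not $_.IsConnected } |
        Connect-IscsiTarget -IsPersistent $true

    # Rescan so the new volumes show up to the disk cmdlets.
    Update-HostStorageCache
}
```

It isn’t a right-click, but it does remove the per-host repetition.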

Storage: Boot Disks and SCSI-3 Reservation

Note: All of my problems with storage were resolved by wiping the Windows Hyper-V R2 Preview from the hosts two weeks ago, and installing the Windows Hyper-V R2 RTM that became available to TechNet/MSDN subscribers. Nothing about my configuration had changed.

I guess all I could do with the validation configuration wizard is work through each of the warnings – decode and try to resolve them.

It was time to take another break. Make a cup of coffee and sit down with one of my books to read the “Failover Clustering” chapter and get these CSV and storage requirements into my head. I was surprised how little attention the books I had bought pay to the storage configuration. Perhaps they are assuming that the readers are Windows Admins who probably know clustering pretty well already?

This did resolve some of my error messages, but also generated newer, nastier looking ones as well.

In this case parts of the test were being cancelled because a much bigger failure was causing other parts of the test to be invalid. The precise failure was on SCSI-3 Persistent Reservations.

I realise the graphic here might be difficult to read, so here’s what the messages say exactly:

Node hyperv01nyc.corp.com successfully issued call to Persistent Reservation RESERVE for Test Disk 0 which is currently reserved by node hyperv02nyc.corp.com. This call is expected to fail.

Test Disk 0 does not provide Persistent Reservations support for the mechanisms used by failover clusters. Some storage devices require specific firmware versions or settings to function properly with failover clusters. Please contact your storage administrator or storage vendor to check the configuration of the storage to allow it to function properly with failover clusters.

Node hyperv01nyc.corp.com successfully issued call to Persistent Reservation RESERVE for Test Disk 1 which is currently reserved by node hyperv02nyc.corp.com. This call is expected to fail.

Test Disk 1 does not provide Persistent Reservations support for the mechanisms used by failover clusters. Some storage devices require specific firmware versions or settings to function properly with failover clusters. Please contact your storage administrator or storage vendor to check the configuration of the storage to allow it to function properly with failover clusters.

From what I can tell this is another bogus warning. The error concerns test disk 0. Disk0 is my “Management OS” boot partition that I would not want to be part of the Failover Cluster. It seems like the validation configuration cannot exclude the Windows Hyper-V layer from the test. The fact that disk1 appears is kinda reassuring – but I can’t help wishing the other tests weren’t cancelled because of this. I was so concerned about this warning I decided I had to hold off creating a cluster. Perhaps it was a hardware issue with my Lenovo TS200s – it could be I need a driver update for them to stop this error occurring. It’s not an issue I’ve ever had to worry about with VMware vSphere.

Some googling around appears to suggest that these errors might be caused by the Validate Configuration tool wanting to confirm the setup for a new feature of Windows Hyper-V called “Storage Spaces”, and that this may be a misleading error in the cluster validation wizard. Given that the message explicitly references speaking to your storage administrator (that’s me, and what do I know?!?) or your “storage vendor”, I contacted my friends in Nashua, New Hampshire in the Dell EqualLogic business unit.

I worked very closely with Dell EqualLogic – who were incredibly patient and incredibly helpful. Words fail me (which I know is unusual for me!) but without their help I wouldn’t have got very far. With their assistance I was able to do firmware updates to their latest and greatest 6.0.6 code. This didn’t resolve the problem with the “Preview” release of Windows Hyper-V R2. My problems with storage didn’t end until two weeks ago when the RTM version of Windows Hyper-V R2 finally shipped.

Conclusion:

The best I could come up with was this result for a test – using the default complete test.

As we saw earlier the “warning” comes from the validator not understanding the fact I’m using Microsoft Teamed NICs, and the failure comes from it trying to validate the boot disk, and finding it unsuitable.

I do understand the point of these validation tools, and in the main I approve of them. Although you have to wonder how complicated a piece of software has to be to justify a vendor having to write another piece of software to check that all the pre-requisites have been met. But what really irks me is a validation tool that doesn’t really understand what it is validating. That creates false concerns where none should exist. With that said, the validator did pick up on some misconfigurations that were of my own making, and for that I am moderately grateful.

Occasionally, I’ve debated with folks about the rights and wrongs of these sorts of validation tools. Those in favour often use the analogy of the validation that Big Pharma and Airline industries go through to confirm their work is good. I see the analogy, but I’m not sure that the software industry needs or desires that level of validation – the kind that requires 10 years to develop a new drug or an airplane. Even with these levels of due diligence in the production process – mistakes still take place – as with the batteries on the new Boeing Dreamliner or contraception pills that contain a placebo. In my view software should be so easy to set up you don’t need validation tools. The requirements should be clear and easy to meet – and the validation should be triggered only if you don’t meet those. Your environment can and does change – so you should get alerts if the cluster loses its integrity – say if you have a NIC failure or the storage doesn’t mount correctly.

By now it was 3.50pm (in reality it took me weeks to get this far, but that’s more indicative of how busy I am with other work), and I had some 16 validation reports located in the /temp directories – each one was associated with me making configuration changes to get a green tick next to every category. It was time to bite the bullet and actually try creating my first failover cluster for Windows Hyper-V, and that, as they say, is another story…

I must admit I’m really grateful that I don’t have an existing Windows Server 2012 Hyper-V SP1 cluster that I’m trying to migrate to the R2 Preview – the process looks horrendous, and something you just wouldn’t have to do with a VMware HA/DRS cluster.